AIWorries

François Chollet is/was an engineer at Google. In this article he writes about what actually worries him about AI.

But what if we're worrying about the wrong thing, like we have almost every single time before? What if the real danger of AI was far remote from the "superintelligence" and "singularity" narratives that many are panicking about today? In this post, I'd like to raise awareness about what really worries me when it comes to AI: the highly effective, highly scalable manipulation of human behavior that AI enables, and its malicious use by corporations and governments.

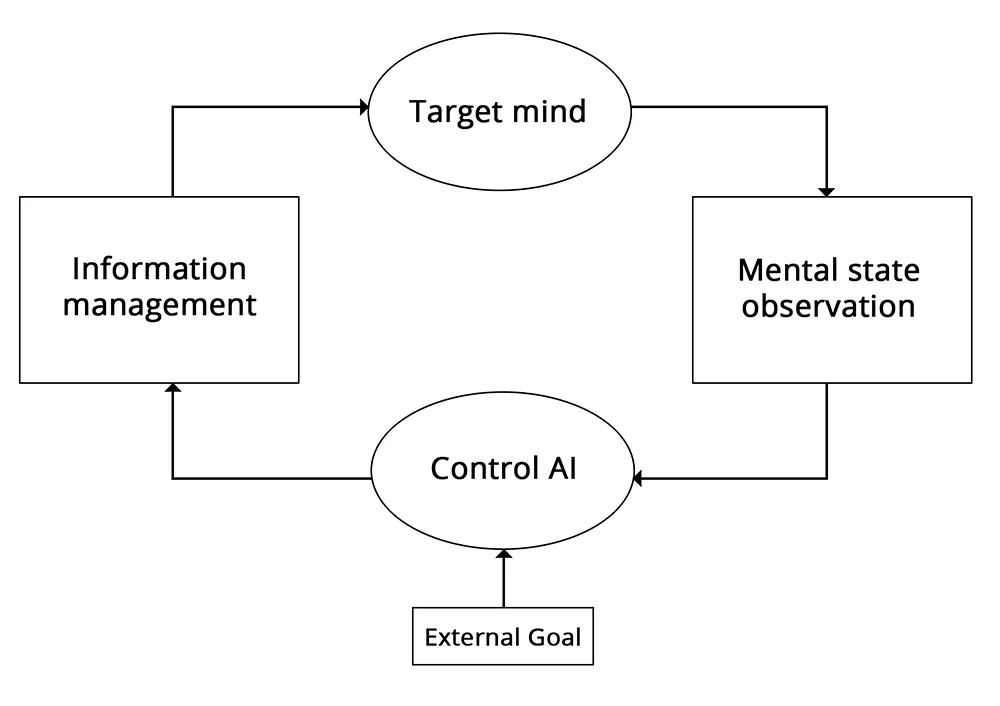

...[social network companies can simultaneously measure everything about us, and control the information we consume.]{.mark} And that's an accelerating trend. When you have access to both perception and action, you're looking at an AI problem. You can start establishing an optimization loop for human behavior, in which you observe the current state of your targets and keep tuning what information you feed them, until you start observing the opinions and behaviors you wanted to see. A large subset of the field of AI - in particular "reinforcement learning" - is about developing algorithms to solve such optimization problems as efficiently as possible, to close the loop and achieve full control of the target at hand - in this case, us. [By moving our lives to the digital realm, we become vulnerable to that which rules it - AI algorithms.]{.mark}
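To make the "optimization loop" concrete, here is a toy sketch of my own, not something from the article. It assumes a simulated user with fixed click probabilities per content type (the category names and numbers are invented) and uses epsilon-greedy, one of the simplest reinforcement-learning strategies, to pick what to show next.

```python
import random

def run_feedback_loop(click_prob, steps=5000, epsilon=0.1, seed=0):
    """Epsilon-greedy loop: show an item, observe the reaction, update estimates."""
    rng = random.Random(seed)
    arms = list(click_prob)
    shown = {a: 0 for a in arms}     # how often each content type was served
    value = {a: 0.0 for a in arms}   # running estimate of engagement per type
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.choice(arms)            # explore: try something at random
        else:
            arm = max(arms, key=value.get)    # exploit: serve the best-looking type
        # "Perception": observe whether the simulated user engaged.
        reward = 1.0 if rng.random() < click_prob[arm] else 0.0
        shown[arm] += 1
        # Incremental mean update of the engagement estimate.
        value[arm] += (reward - value[arm]) / shown[arm]
    return shown, value

# Hypothetical simulated user; the probabilities are made up for illustration.
SIMULATED_USER = {"neutral": 0.05, "outrage": 0.30, "cute": 0.15}
shown, value = run_feedback_loop(SIMULATED_USER)
print(shown)
```

Nothing in the loop knows what the content means. It only sees which type gets the strongest reaction and serves more of it, which is the point the quoted passage is making.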

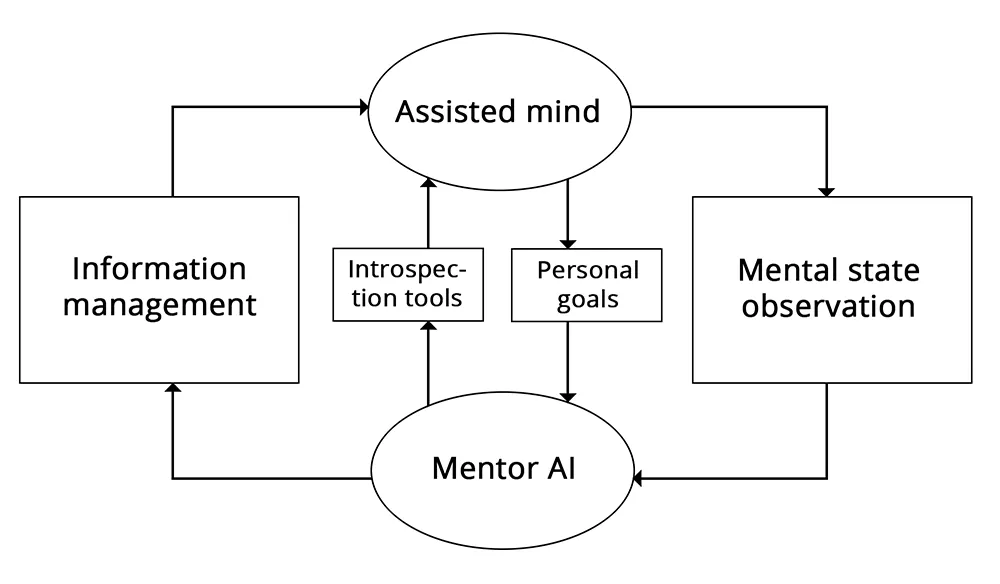

We should build AI to serve humans, not to manipulate them for profit or political gain. What if newsfeed algorithms didn't operate like casino operators or propagandists? What if instead, they were closer to a mentor or a good librarian, someone who used their keen understanding of your psychology - and that of millions of other similar people - to recommend to you that next book that will most resonate with your objectives and make you grow. A sort of navigation tool for your life.

Here's an idea - any algorithmic newsfeed with significant adoption should:

- Transparently convey what objectives the feed algorithm is currently optimizing for, and how these objectives are affecting your information diet.

- Give you intuitive tools to set these goals yourself. For instance, it should be possible for you to configure your newsfeed to maximize learning and personal growth - in specific directions.

- Feature an always-visible measure of how much time you are spending on the feed.

- Feature tools to stay in control of how much time you're spending on the feed - such as a daily time target, past which the algorithm will seek to get you off the feed.
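As a rough illustration of the four points above, here is a minimal sketch of my own of what such a feed could look like in code. All class, field, and objective names are hypothetical. It also assumes each item already carries per-objective scores, and it simply stops serving items once the daily target is reached.

```python
from dataclasses import dataclass, field

@dataclass
class FeedSettings:
    # User-chosen objective weights (hypothetical names).
    objectives: dict = field(
        default_factory=lambda: {"learning": 1.0, "entertainment": 0.0}
    )
    daily_minutes_target: int = 30

@dataclass
class Feed:
    settings: FeedSettings
    minutes_today: float = 0.0

    def describe_objectives(self):
        """Transparency: report the share of each objective in the ranking."""
        total = sum(self.settings.objectives.values()) or 1.0
        return {k: w / total for k, w in self.settings.objectives.items()}

    def score(self, item_scores):
        """Weighted sum of an item's per-objective scores."""
        weights = self.settings.objectives
        return sum(weights.get(k, 0.0) * v for k, v in item_scores.items())

    def rank(self, items):
        """items maps title -> per-objective scores; empty once past the target."""
        if self.minutes_today >= self.settings.daily_minutes_target:
            return []
        return sorted(items, key=lambda t: self.score(items[t]), reverse=True)

    def time_status(self):
        """Always-visible measure of time spent today."""
        return f"{self.minutes_today:.0f} / {self.settings.daily_minutes_target} min today"

feed = Feed(FeedSettings(objectives={"learning": 0.8, "entertainment": 0.2}))
items = {
    "meme": {"learning": 0.0, "entertainment": 1.0},
    "tutorial": {"learning": 0.9, "entertainment": 0.1},
}
print(feed.describe_objectives(), feed.rank(items), feed.time_status())
```

The user, not the operator, sets the weights in `FeedSettings`, and `describe_objectives` exposes exactly what the ranking is optimizing for.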